Introduction

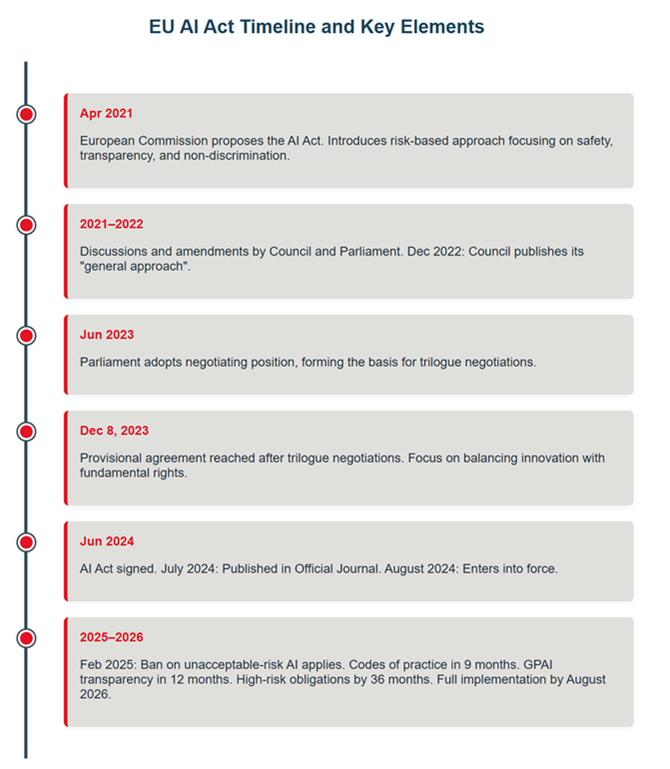

The European Union’s Artificial Intelligence Act (EU AI Act) represents the world’s first binding, comprehensive regulation on artificial intelligence. It was formally adopted in June 2024 and entered into force on 1 August 2024, with obligations phasing in over the following 24–36 months. This legislation establishes a robust framework to ensure that AI systems developed, deployed, and used within the EU adhere to strict measures designed to protect fundamental rights, promote innovation, and safeguard public safety.

By categorising AI applications into four risk levels (unacceptable, high, limited, and minimal), the Act not only shapes the pathway toward trustworthy and human-centric AI technologies but also serves as a benchmark for global AI governance. The transformative potential of AI across multiple sectors, from business and critical infrastructure to retail, telecare, healthcare, automotive, and security systems, makes it imperative for business leaders and industry stakeholders to understand, prepare for, and comply with these regulatory requirements.

It is important to remember, however, that:

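- The Act entered into force in 2024, and the first rules (the prohibitions on unacceptable-risk practices) began to apply in February 2025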

- Most obligations for high-risk systems phase in over 24–36 months

- Providers will have transition time to comply

- High-risk systems will require continuous post-market monitoring as an explicit legal requirement under the Act
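The phasing described above can be sketched as a simple date lookup. This is a minimal illustration, not legal advice: the application dates below reflect our reading of the Act's staged timeline (6, 12, 24, and 36 months after entry into force) and should be checked against the Official Journal text. The names `MILESTONES` and `obligations_in_force` are hypothetical.

```python
from datetime import date

# Assumed application dates (our reading of the Act's staged timeline;
# verify against the published Regulation before relying on them).
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk practices apply"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining provisions apply, including most high-risk obligations"),
    (date(2027, 8, 2), "High-risk obligations for AI embedded in regulated products apply"),
]


def obligations_in_force(on: date) -> list[str]:
    """Return the milestones whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


# Example: what applied in spring 2025?
for label in obligations_in_force(date(2025, 3, 1)):
    print(label)
```

A lookup of this kind is only a planning aid; transition periods for systems already on the market add further nuances that are not modelled here.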

This article therefore examines the key elements of the EU AI Act and explores its implications for businesses and critical sectors. Drawing on several academic and policy sources, including recent editorials on the regulatory framework, further analyses of its impact on financial regulation and human rights, and comprehensive overviews of the Act, this discussion provides an in-depth view of how the regulation will shape future AI development in Europe.

Key Provisions of the EU AI Act

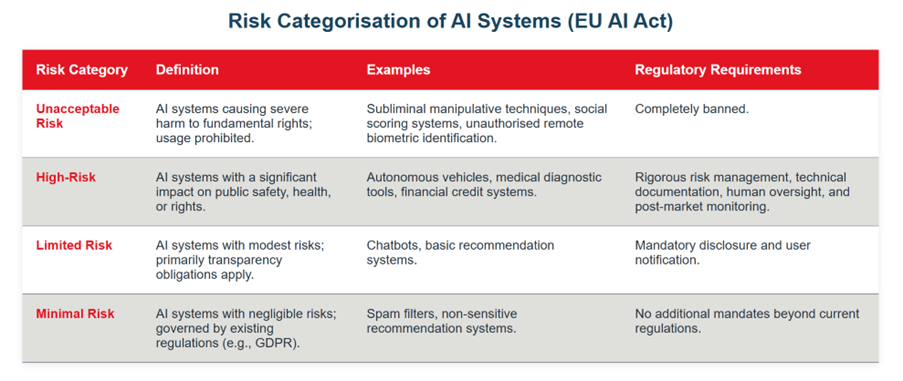

The EU AI Act employs a risk-based approach to regulate the use of artificial intelligence. This approach distinguishes between AI systems by evaluating the potential harm they may inflict on individual rights, public safety, and overall societal wellbeing. The major risk categories outlined in the Act are as follows:

- Unacceptable Risk: AI systems that present an unacceptable risk are outright prohibited. These include AI systems employing manipulative subliminal techniques, exploiting vulnerable groups, and engaging in practices such as social scoring or unauthorised real-time remote biometric identification in public spaces. The stringent prohibition is designed to forestall any application of AI that could fundamentally undermine human dignity or democratic principles.

- High-Risk AI Systems: AI systems deemed high risk are permitted under strict conditions. These systems, which can have significant implications for safety and fundamental rights, must comply with demanding requirements including detailed risk management protocols, robust data governance, technical documentation, human oversight, rigorous testing, and post-market surveillance. High-risk AI systems often include those integrated into critical national infrastructure, healthcare devices, autonomous vehicles, and critical financial applications.

- Limited Risk AI Systems: AI systems that present limited risks, such as chatbots or basic user interaction tools, are subject to transparency obligations. For example, users must be explicitly informed when they are interacting with an AI system. This ensures that even where the risk is low, there is clarity and trust between the service provider and the user.

- Minimal Risk AI Systems: AI systems that pose minimal or negligible risk are largely exempt from additional obligations beyond existing legislation (such as the GDPR). These systems include, for example, some spam filters and recommendation engines that do not involve sensitive personal data.

The Act also supports innovation through regulatory sandboxes, helping SMEs test AI systems under supervised conditions.

Table: Risk-Based Classification of AI Systems under the EU AI Act

A visual summary of the risk-based classification is provided in the table below.

| Risk Category | Definition | Examples | Regulatory Requirements |
| --- | --- | --- | --- |
| Unacceptable Risk | AI systems with a threat to human dignity and fundamental rights; prohibited from use. | Subliminal manipulation, social scoring, unauthorised remote biometric identification. | Banned outright; zero tolerance rule. |
| High-Risk | AI systems with potential detrimental impact on safety, health, or fundamental rights. | Autonomous driving systems, healthcare diagnostic tools, financial credit assessments. | Mandatory risk management frameworks, conformity assessments, human oversight, post-market monitoring. |
| Limited Risk | AI systems with modest potential risks; subject mainly to transparency obligations. | Chatbots, basic customer interface systems. | Disclosure requirements to ensure user awareness. |
| Minimal Risk | AI systems with minimal or no risk; fall under existing legislative controls (e.g., GDPR). | Basic recommendation engines, spam filters. | No additional obligations beyond current regulations. |

The risk categorisation under the Act ensures that regulatory efforts are aligned with the potential dangers and real-world applications of AI technologies. The Act’s emphasis on transparency and accountability, particularly for high-risk systems, underscores the EU’s commitment to harnessing technological innovation while protecting fundamental societal values.
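For teams that keep an internal register of AI systems, the four tiers can be represented as a small lookup structure. The sketch below is illustrative only: the obligation strings paraphrase the classification table in this article rather than the legal text, and the names `RiskTier`, `OBLIGATIONS`, `is_permitted`, and `obligations_for` are hypothetical.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the table in this article (illustrative,
# not an authoritative statement of the legal requirements).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited: must not be placed on the market or used"],
    RiskTier.HIGH: [
        "risk management framework",
        "conformity assessment",
        "human oversight",
        "post-market monitoring",
    ],
    RiskTier.LIMITED: ["disclose to users that they are interacting with an AI system"],
    RiskTier.MINIMAL: [],  # no obligations beyond existing law such as the GDPR
}


def is_permitted(tier: RiskTier) -> bool:
    """Only the unacceptable tier is banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


def obligations_for(tier: RiskTier) -> list[str]:
    """Look up the illustrative obligations attached to a tier."""
    return OBLIGATIONS[tier]


print(is_permitted(RiskTier.HIGH), obligations_for(RiskTier.HIGH))
```

Deciding which tier a given system belongs to is a legal assessment; this structure only records the outcome of that assessment.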

Implications for Businesses

Businesses operating within and beyond the EU market are directly impacted by the new regulatory framework. The EU AI Act requires companies to adopt a comprehensive internal compliance strategy that categorises their AI systems by risk level and ensures that all high-risk systems meet the stringent obligations mandated by the legislation.

Key Implications Include:

- Categorisation and Documentation: Business leaders must categorise their AI systems accurately based on the defined risk levels. For high-risk systems, comprehensive technical documentation, detailed risk management protocols, and continuous monitoring are mandatory. As noted by financial and regulatory analysts, sectors such as banking already carry additional layers of compliance, which will now extend to their AI deployments.

- Enhanced Transparency: For limited-risk AI systems, providers must clearly inform customers that interaction is with an AI system. Transparency measures, such as watermarking of AI-generated content, are required to ensure consumers can distinguish between human-made and machine-generated outputs. Providers placing AI-generated or manipulated content (e.g. deepfakes) on the market must ensure clear labelling to inform users.

- Interoperability and Data Governance: High-risk AI systems must adhere to robust data governance frameworks. This includes maintaining data quality and security, which may require substantial investments in technical infrastructure and training. Where data flows cross borders, companies must adjust their operational procedures to align with both EU standards and existing national regulations.

- Sector-Specific Concerns: Industries that integrate AI into critical operations, such as the financial sector, require additional verification and supervisory measures. For example, banks must ensure that AI systems used in creditworthiness assessments comply with both the EU AI Act and established financial regulation frameworks.

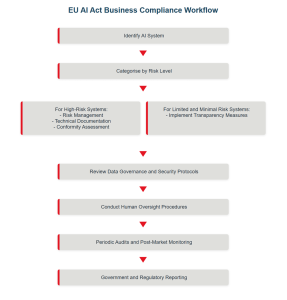

Flowchart: Business Compliance Workflow for the EU AI Act

The following flowchart illustrates the internal process that businesses may adopt to ensure compliance with the EU AI Act:

This compliance workflow underscores the multifaceted requirements that companies must navigate. SMEs, in particular, may face significant challenges related to resource allocation and technical expertise. Early strategic planning and investment in compliance infrastructure are therefore critical for maintaining competitive advantage and avoiding regulatory penalties.
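One way to picture such a workflow is as a gap analysis over an inventory of AI systems: each system is categorised, and the workflow reports which compliance artefacts are still missing. The following is a minimal sketch under stated assumptions. The artefact names, the `AISystem` class, and `next_actions` are hypothetical and loosely follow the obligations named in this article; they are not an official checklist.

```python
from dataclasses import dataclass, field

# Hypothetical artefact names based on the high-risk obligations discussed
# in this article; a real programme would derive these from the legal text.
HIGH_RISK_ARTEFACTS = (
    "risk_management_plan",
    "technical_documentation",
    "data_governance_record",
    "human_oversight_procedure",
    "post_market_monitoring_plan",
)


@dataclass
class AISystem:
    name: str
    risk_tier: str  # "unacceptable", "high", "limited" or "minimal"
    artefacts: set = field(default_factory=set)  # artefacts already in place


def next_actions(system: AISystem) -> list[str]:
    """Return the outstanding compliance actions for one system."""
    if system.risk_tier == "unacceptable":
        return ["withdraw or redesign: practice is prohibited"]
    if system.risk_tier == "high":
        return [f"produce {a}" for a in HIGH_RISK_ARTEFACTS if a not in system.artefacts]
    if system.risk_tier == "limited":
        if "ai_disclosure_notice" in system.artefacts:
            return []
        return ["add ai_disclosure_notice"]
    if system.risk_tier == "minimal":
        return []
    raise ValueError(f"unknown risk tier: {system.risk_tier!r}")


inventory = [
    AISystem("credit-scoring", "high", {"technical_documentation"}),
    AISystem("support-chatbot", "limited"),
    AISystem("spam-filter", "minimal"),
]
for system in inventory:
    print(system.name, "->", next_actions(system))
```

Keeping the required artefacts in one tuple makes the checklist easy to revise as harmonised standards are published.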

Impact on Critical National Infrastructure

Critical National Infrastructure (CNI) is fundamental to a nation’s public safety and economic stability. The integration of AI systems into such infrastructures, spanning energy grids, transportation networks, water treatment facilities, and many more types of systems and networks, places them predominantly under the high-risk category as defined by the EU AI Act. CNI planners therefore need to consider the following aspects:

- Safety Components and Risk Management:

AI systems that serve as safety-critical components, such as those used in traffic management or electricity distribution, must meet elevated standards of reliability and safety. These systems are subjected to comprehensive conformity assessments, rigorous testing, and continuous post-market monitoring to ensure they operate without introducing undue risks to public welfare.

- Interdependency with Existing Regulations:

CNIs are already governed by sector-specific regulations. The AI Act complements these existing frameworks by adding layers of oversight for AI-dependent processes. In transportation, for instance, any autonomous driving systems incorporated into public transit must adhere to both current safety standards and the new AI-specific requirements, ensuring that technological advancements do not compromise operational integrity.

- Cybersecurity Considerations:

The enhanced integration of AI introduces additional vulnerabilities to cyberattacks. For CNIs, cybersecurity measures are therefore paramount. AI systems used in these sectors must undergo stringent cybersecurity testing and maintain robust defence protocols against hacking attempts, data breaches, and other malicious activities.

- System Resilience and Interruption Management:

In cases where AI systems fail or produce erroneous outputs, immediate corrective actions and fallback procedures must be in place. This requirement ensures continuity of services for essential infrastructure and minimises potential cascading effects throughout the economy and the impacts on public and private organisations and end users.
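The fallback requirement in the last point can be illustrated with a small wrapper that routes to a conventional procedure whenever the AI component raises an error or returns an implausible value. This is a sketch under assumptions, not a prescribed design: the traffic-signal scenario, the 10 to 120 second plausibility range, and all function names are hypothetical.

```python
from typing import Callable, Tuple


def with_fallback(
    predict: Callable[[float], float],
    fallback: Callable[[float], float],
    is_valid: Callable[[float], bool],
) -> Callable[[float], Tuple[float, str]]:
    """Wrap `predict` so that errors or implausible outputs trigger `fallback`."""

    def guarded(x: float) -> Tuple[float, str]:
        try:
            y = predict(x)
        except Exception:
            # The model failed outright: use the conventional procedure.
            return fallback(x), "fallback"
        if not is_valid(y):
            # The model answered, but outside the safe operating envelope.
            return fallback(x), "fallback"
        return y, "model"

    return guarded


# Hypothetical example: green-light duration (seconds) for a traffic signal.
def faulty_model(vehicles_waiting: float) -> float:
    return -5.0  # an obviously erroneous output


def fixed_timing(vehicles_waiting: float) -> float:
    return 30.0  # conservative fixed schedule


def plausible(seconds: float) -> bool:
    return 10.0 <= seconds <= 120.0


controller = with_fallback(faulty_model, fixed_timing, plausible)
print(controller(12))  # (30.0, 'fallback')
```

Returning the source of each decision alongside the value gives operators a simple signal to log, which also supports post-market monitoring.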

Table: Regulatory Requirements for AI in Critical National Infrastructure

The following table outlines key regulatory expectations for AI systems deployed in critical national infrastructure:

| Aspect | Regulatory Requirement | Example Application | Relevant Provisions |
| --- | --- | --- | --- |
| Safety Management | Rigorous testing, risk management, conformity assessments | Autonomous transit control systems | High-risk requirements |
| Data Governance | Strict data security, documentation, continuous monitoring | Smart grid monitoring systems | EU AI Act, Annex requirements |
| Cybersecurity | Implementation of robust security protocols | Cyber-attack prevention in energy grids | Post-market surveillance |
| Continuity and Resilience | Immediate corrective actions and fallback procedures | Emergency response for railway systems | Risk management framework |

These stringent measures are designed to foster trust and ensure that the modernisation of critical infrastructure does not come at the expense of safety or national security.

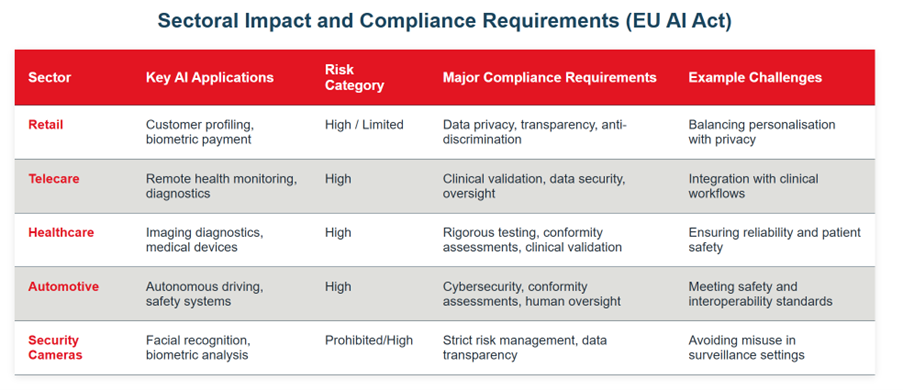

Sector-Specific Analysis

The EU AI Act’s detailed framework affects various industry sectors in different ways. This section explores the specific implications for key industries including retail, telecare, healthcare, automotive systems, and security cameras.

Post-market monitoring is a key compliance requirement for every high-risk use case and sector discussed below, and it should be planned for as soon as a system is categorised as high-risk.

Retail

In the retail sector, AI is widely employed for customer profiling, personalised recommendations, inventory management, and even biometric payment systems. The regulation has several specific implications:

- Customer Profiling and Recommendation Engines: Many AI-driven customer profiling systems analyse behavioural data to optimise product recommendations. However, if such systems utilise biometric data (for example, facial recognition for personalised advertising), they may be classified as high-risk due to the privacy implications and potential for misuse.

- Biometric and Emotion Recognition: Retail environments that deploy biometric categorisation systems (e.g., facial recognition in security cameras) must adhere to the strict provisions that govern prohibited practices. The AI Act prohibits systems that use manipulative techniques or exploit vulnerabilities such as age or disability. Retailers must therefore ensure that any integrated biometric technology complies fully with these regulations and maintains transparent data usage policies.

- Data Handling and Transparency: Retailers must implement robust data governance practices. Clear, accessible technical documentation and adherence to transparency requirements are vital to mitigate customer concerns regarding data privacy and AI decisions. This is particularly important in environments where real-time data is collected and processed for immediate use in targeted marketing systems or security surveillance operations.

Telecare

The telecare sector is starting to leverage artificial intelligence to enhance patient monitoring, remote diagnosis, and personalised healthcare services, all while reducing the costs of care delivery.

- Health Monitoring Systems:

AI systems that monitor vital signs, predict potential health emergencies, or manage chronic conditions fall under the high-risk category. These systems must pass rigorous testing and validation, often requiring clinical trials or a comparable process, to ensure that they function safely and effectively.

- Confidentiality and Data Privacy:

As telecare systems frequently handle sensitive personal health information, robust data privacy measures are essential. Compliance with both the EU AI Act and the General Data Protection Regulation (GDPR) requires that patient data be managed securely, with clear audit trails and access control mechanisms in place.

- Integration with Medical Devices:

Telecare solutions increasingly integrate with medical devices designed to provide continuous care. These integrated systems are categorised as high-risk and must be thoroughly documented and certified before receiving market approval. The requirement for human oversight also applies, ensuring that medical professionals remain in control of critical decision-making processes.

Healthcare

Healthcare represents one of the most critical applications and domains for AI, where systems not only enhance the efficiency of care delivery but also have the potential to save or impact lives.

- Medical Devices and Diagnostics:

AI is already transforming healthcare through advanced imaging diagnostics, some forms of robotic-assisted surgery, and predictive analytics for disease management. Due to the directly consequential nature of these applications, such systems are classified as high-risk. They must undergo extensive clinical validation, risk assessments, and regulatory conformity processes.

For instance, AI-assisted diagnostic tools used in radiology are subject to rigorous validation to ensure accuracy and reliability, minimising the risk of misdiagnosis and subsequent adverse outcomes for patients.

- Operational Efficiency vs. Patient Safety:

While AI has the potential to streamline administrative and clinical processes, the requirement for continuous human oversight means that healthcare providers must maintain control over decision-making. This balance between operational efficiency and patient safety is central to the EU AI Act’s vision for trustworthy AI in healthcare.

- Data Integrity and Security:

Healthcare platforms must invest in secure data management systems that ensure the integrity and confidentiality of patient records. Given the risk of data breaches and the sensitive nature of health data, healthcare providers must deploy state-of-the-art cybersecurity measures along with robust backup and recovery protocols.

Automotive

The automotive industry is at the forefront of integrating AI into vehicles, ranging from driver assistance systems to fully autonomous vehicles. Under the EU AI Act, these AI implementations are subject to particularly high levels of scrutiny.

- Safety Components in Autonomous Vehicles:

AI systems used as safety components, such as collision avoidance systems, lane-keeping assistants, and adaptive cruise control, are explicitly categorised as high-risk. Manufacturers must perform rigorous conformity assessments, ensuring that these systems meet strict safety, cybersecurity, and performance standards.

Autonomous vehicles, which are poised to revolutionise mobility, require extensive testing and validation to ensure they can safely operate in complex and unpredictable road environments.

- Cybersecurity in Connected Vehicles:

With the increasing connectivity of automotive systems, cybersecurity becomes a critical factor. AI-driven diagnostics and vehicle-to-everything (V2X) communications systems must incorporate sophisticated cybersecurity measures to prevent hacking and unauthorised access that could lead to accidents, traffic disruption or interference with the energy grid.

- Integration with Mobility Ecosystems:

Automotive AI systems will increasingly interface with broader mobility ecosystems, including smart traffic management, public transit coordination, and even emergency services in some circumstances. Ensuring interoperability and seamless data flow across disparate systems is a significant regulatory challenge that requires continuous monitoring and updating of compliance processes.

Security Cameras

Security cameras and similar surveillance systems are increasingly augmented by AI capabilities, such as facial recognition, motion detection, and behaviour analysis, to enhance public and private security operations.

- Biometric Identification and Prohibitions:

The Act specifically addresses the use of real-time remote biometric identification systems in public spaces. With only narrowly defined exceptions, these applications are largely prohibited due to the high risk of infringing on fundamental rights and privacy.

Deployment of such systems in commercial settings (e.g., retail security or workplace monitoring) requires strict adherence to transparency and data protection requirements. For example, if a security camera system uses facial recognition technology, it must include clear notices that such data is being processed and ensure that the data is securely handled and ultimately deleted when no longer necessary.

- Post-Event Analysis and High-Risk Oversight:

Even if real-time identification is restricted, post-event biometric analysis might be allowed under controlled circumstances, such as for law enforcement investigations. In such cases, these systems are regulated as high-risk applications, demanding stringent risk management protocols and oversight procedures.
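The expectation that captured data is deleted once it is no longer necessary can be sketched as a periodic retention check. This is an illustrative example only: the 30-day window is a hypothetical value (the appropriate period depends on the operator's documented purpose and legal basis), and the record layout and `split_expired` function are assumptions.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; the real value depends on the operator's
# documented purpose and legal basis, not on this sketch.
RETENTION = timedelta(days=30)


def split_expired(records, now):
    """Partition records into (kept, to_delete) according to capture age."""
    kept, to_delete = [], []
    for record in records:
        age = now - record["captured_at"]
        (to_delete if age >= RETENTION else kept).append(record)
    return kept, to_delete


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": "a", "captured_at": now - timedelta(days=45)},
    {"id": "b", "captured_at": now - timedelta(days=5)},
]
kept, to_delete = split_expired(records, now)
print([r["id"] for r in kept], [r["id"] for r in to_delete])  # ['b'] ['a']
```

In practice the deletion step itself would also need to be logged, so that the operator can demonstrate that the retention policy is actually enforced.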

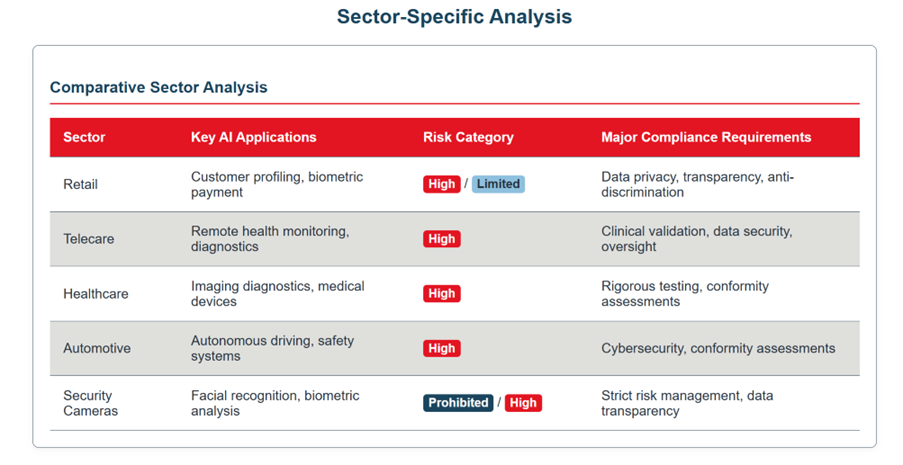

Table: Sector Specific Analysis Summary

The following table illustrates and summarises the sector specific challenges:

Compliance Challenges

While the EU AI Act establishes a visionary framework for the regulation of artificial intelligence, it also presents considerable compliance challenges for businesses and organisations alike. The following points outline some of the central hurdles to implementation:

- Technical and Financial Burdens:

The comprehensive documentation, continuous monitoring, and rigorous conformity assessments required for high-risk AI systems place a substantial financial and technical burden on companies. Smaller enterprises and startups may struggle to meet these demands without external support or strategic investments in compliance infrastructure.

- Interoperability Between Regulations:

Businesses are required to align the EU AI Act with pre-existing regulatory frameworks such as the GDPR, sector-specific safety requirements, and financial oversight measures. This multiplicity of obligations can lead to regulatory fragmentation and complexity, necessitating cross-disciplinary strategies and, potentially, collaborative efforts between industry groups.

- Rapid Technological Evolution:

AI technologies, and especially generative AI and machine learning models, are evolving at a rapid pace, often outstripping existing regulatory definitions and standards. This dynamic environment makes it challenging for companies to maintain compliance, particularly when national and international policies may lag behind technological advancements.

- Transparency and Data Governance:

Implementing transparency measures (such as watermarking for AI-generated content and comprehensive technical documentation) is not only a technical challenge but also requires that companies reconfigure their data governance frameworks. Ensuring that data flows across organisational and cross-border boundaries comply with the EU AI Act can demand significant restructuring of internal systems.

- Human Oversight and Accountability:

Another compliance challenge is the requirement for human oversight throughout the AI system lifecycle. Businesses must establish processes that ensure qualified human intervention is integrated into automated decision-making processes, which may involve restructuring workflows and training personnel.

- International Cooperation and Cross-Border Implications:

For multinational enterprises, the need to align with both EU regulations and standards in other regions (such as the U.S. or Asia) can create operational uncertainties. The EU AI Act’s focus on harmonised data flows and international cooperation calls for a coordinated approach, potentially affecting global supply chains and collaborative innovation efforts that will need to be understood and addressed.

Conclusion

The EU AI Act represents a pioneering legislative effort that sets a high bar for the governance of artificial intelligence. Its risk-based approach categorises AI systems into unacceptable, high, limited, and minimal risk levels, thereby defining clear regulatory pathways for each application. While the Act offers a structured framework backed by significant penalties, it also imposes substantial compliance challenges that demand major investments in technical infrastructure, data governance, and human oversight strategies.

Key Findings

- Comprehensive Regulatory Framework:

The EU AI Act is the world’s first binding, comprehensive regulation on AI, establishing clear categories based on risk and imposing strict obligations on high-risk systems.

- Risk-Based Approach:

Unacceptable risk applications are banned; high-risk systems must meet rigorous standards; and limited-risk systems require a strong focus on transparency.

- Implications for Business:

Companies must implement robust internal processes for risk categorisation, technical documentation, data governance, and continuous monitoring. Financial institutions and other regulated sectors must align AI compliance with existing sector-specific legislation and with the Act’s additional requirements.

- Impact on Critical National Infrastructure:

AI applications in domains such as energy grids, transportation networks, and safety-critical systems are classified as high-risk, requiring thorough conformity assessments, post-market monitoring, and robust cybersecurity measures.

- Sector-Specific Considerations:

- Retail: Systems must balance the use of biometric and emotion recognition technologies with strict data privacy and transparency requirements.

- Telecare and Healthcare: Demand rigorous clinical validation, data protection, and high levels of human oversight due to the sensitivity of medical applications.

- Automotive: Autonomous vehicle systems and connected vehicle technologies are subject to critical safety and cybersecurity standards to protect users and the public.

- Security Cameras: Systems utilising biometric identification risk prohibitions or strict high-risk regulations, to ensure that surveillance practices do not compromise individual rights.

- Compliance Challenges:

Significant technical, financial, and administrative challenges exist, particularly for SMEs. The fast pace of AI innovation and the need for seamless interoperability with existing regulations amplify these challenges, while ensuring human oversight remains a central requirement of the Act.

- Across all sectors, it is critically important to implement systems that recognise that high-risk systems require continuous post-market monitoring as an explicit legal requirement under the Act.

Forward Look

The EU AI Act sets a global precedent and is likely to inspire further harmonisation in AI governance around the world. Businesses, critical infrastructure operators, and industry-specific stakeholders must prepare proactively to meet these new regulations. As technology advances, aligning internal strategies with evolving regulatory mandates will become increasingly important across organisational structures, practices, and operations. The success of the EU AI Act in fostering innovation while safeguarding fundamental rights will depend largely on the ability of both regulators and businesses to collaborate and adapt in a rapidly changing global landscape.

In summary, the EU AI Act is a landmark regulation that calls for immediate and sustained efforts in compliance and operational realignment. Companies poised to lead in the AI-driven economy must recognise the regulatory challenges and invest in creating robust systems that ensure transparency, accountability, and safety across all AI applications.

As of 2025, the EU is also drafting harmonised standards to support AI Act implementation, so the details of technical requirements may evolve further.

Appendices

Appendix A: Figures

Figure 1: Timeline of Implementation

Figure 2: Risk Categorisation of AI Systems

This table summarises the categorisation of AI systems as outlined in the EU AI Act, illustrating the regulatory requirements and examples for each risk category.

Figure 3: Comparative Analysis: Sectoral Impact and Compliance Requirements

The table below contrasts key factors affecting several sectors impacted by the EU AI Act, highlighting major considerations and regulatory focus areas.

Appendix B: Global Implications of the Act

The EU AI Act is a pioneering regulatory framework setting a global benchmark for AI governance. By categorising applications by risk and prohibiting practices that threaten fundamental rights, it seeks to balance innovation with citizen protection.

For businesses, this requires robust compliance systems, risk categorisation, documentation, transparency measures, and continuous human oversight. These challenges are especially acute for SMEs and highly AI-reliant sectors.

As AI becomes embedded in critical infrastructure and sectors such as retail, healthcare, and automotive, elevated regulatory requirements will demand careful planning that assesses the global and cross-border implications.

The Act’s global impact will depend on collaboration between regulators and businesses to adapt risk assessments, maintain public trust, and foster responsible innovation.

Appendix C: Additional Regulatory Notes

- Implementation Timeline: The AI Act was formally adopted in mid-2024 and entered into force on 1 August 2024, with obligations applying in stages. Many obligations for high-risk providers phase in over 2–3 years.

- Biometric Identification: Real-time remote biometric identification in public spaces is banned except for narrowly defined law enforcement uses. Post-event biometric identification is regulated as high-risk.

- GPAI Transparency Obligations: General-purpose AI (GPAI) models presumed to pose systemic risk (training compute exceeding 10^25 FLOPs[1]) must meet strict transparency and risk management requirements. Models below the threshold can also be covered if they meet the systemic risk criteria.

- AI-Generated Content: Providers placing AI-generated or manipulated content on the market must ensure clear labelling to inform users.

[1] A FLOP (Floating-Point Operation) is a single calculation involving decimal numbers, commonly used to measure the total computational workload of training an AI model. The EU AI Act uses a threshold of ~10²⁵ FLOPs to identify “high-impact” General-Purpose AI models that require additional transparency and risk mitigation obligations, recognising that such large-scale models may pose systemic risks.
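To give a sense of scale for the threshold, the sketch below estimates training compute with a widely used rule of thumb for dense transformer models: roughly 6 floating-point operations per parameter per training token. This approximation comes from the machine learning scaling literature and is not a method prescribed by the Act; the parameter and token counts are hypothetical.

```python
# Threshold referenced in this article for presumed systemic risk.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimated_training_flops(parameters: float, training_tokens: float) -> float:
    """Rule-of-thumb estimate (assumption): ~6 FLOPs per parameter per token."""
    return 6.0 * parameters * training_tokens


def exceeds_threshold(parameters: float, training_tokens: float) -> bool:
    """Compare the estimate against the 10^25 FLOPs threshold."""
    return estimated_training_flops(parameters, training_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models, each trained on 15 trillion tokens.
for params in (70e9, 400e9):
    flops = estimated_training_flops(params, 15e12)
    print(f"{params:.0e} parameters: {flops:.1e} FLOPs, above threshold: {exceeds_threshold(params, 15e12)}")
```

Under these assumptions, the 70-billion-parameter model lands at about 6.3 × 10^24 FLOPs (below the threshold) and the 400-billion-parameter model at about 3.6 × 10^25 FLOPs (above it).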

References

- Alvarado, A., 2025. Lessons from the EU AI Act. Patterns, 6(2).

- Ballell, T.R.D.L.H., 2025, January. Mapping Generative AI rules and liability scenarios in the AI Act, and in the proposed EU liability rules for AI liability. In Cambridge Forum on AI: Law and Governance (Vol. 1, p. e5). Cambridge University Press.

- European Commission, 2024. The Artificial Intelligence Act: Q&A.

- European Parliament and Council of the EU, 2024. Regulation (EU) 2024/… on Artificial Intelligence (AI Act).

- European Parliamentary Research Service, 2023. EU Artificial Intelligence Act: Comprehensive regulatory framework for AI in the EU.

- European Commission, 2021. Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act), COM(2021) 206 final.

- Palmiotto, F., 2025. The AI Act Roller Coaster: The Evolution of Fundamental Rights Protection in the Legislative Process and the Future of the Regulation. European Journal of Risk Regulation, pp.1-24.

- Passador, M.L., 2025. AI in the Vault: AI Act’s Impact on Financial Regulation. Loyola University of Chicago Law Review.